Floors and Boundaries and GeoLocation, Oh My

By Gary Angel

March 4, 2019

One of the real pain-points with electronic-based geo-location is separating out pass-by traffic from shoppers IN the store and handling multi-floor locations. This seems trivial, but it isn’t. It’s a huge deal. And even a good model for x,y location won’t really solve this problem.

Wait. What did I just say? Knowing the x,y coordinate of the shopper won’t solve the in-store vs out-of-store shopper problem? How is that possible?

To understand why that’s so (very much to our initial surprise), let’s review how geo-location modelling works. In the last few posts, I detailed our process for fingerprinting stores. You can read how we generate supervised ML training data with a calibration process that involves a lot of time walking the store with a bunch of madly pinging phones. Then we take that training data and enhance and expand it with a data factory designed to create even more grist for the ML mill. Finally, we put all that data together into an aggregation that gives an ML model a chance to decide exactly where someone is in the store.

Essentially, we fingerprint every location inside the store. Then we take whatever signals are received at a bunch of sensors and use ML to match them to the closest fingerprint.

Can you see where the problem lies?

When you run an ML model on data, it doesn’t have a setting for “I can’t classify that”. It classifies everything. Yes, most classification models do return confidence estimates for each classification, but there is – by definition – one that’s going to be highest. And regression models (which we use for X,Y coordinate generation) don’t even do that.
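To make that concrete, here is a minimal sketch of how a classifier's final step typically behaves. The section names and raw scores are invented for illustration and the softmax/argmax step is a generic stand-in, not a description of any particular model. The point is only that nearly flat, low-information scores still produce a winner.

```python
import math

def softmax(scores):
    """Turn raw scores into confidences that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]

def classify(scores, labels):
    """Return the highest-confidence label. There is no 'none of these' option."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Hypothetical front-of-store sections and nearly identical scores, the kind
# of input a device out on the sidewalk might produce.
SECTIONS = ["section_18", "section_21", "section_26"]
label, confidence = classify([0.10, 0.12, 0.11], SECTIONS)
print(label, round(confidence, 3))
```

The confidence here is barely above one in three, yet the device is still assigned to a section inside the store.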

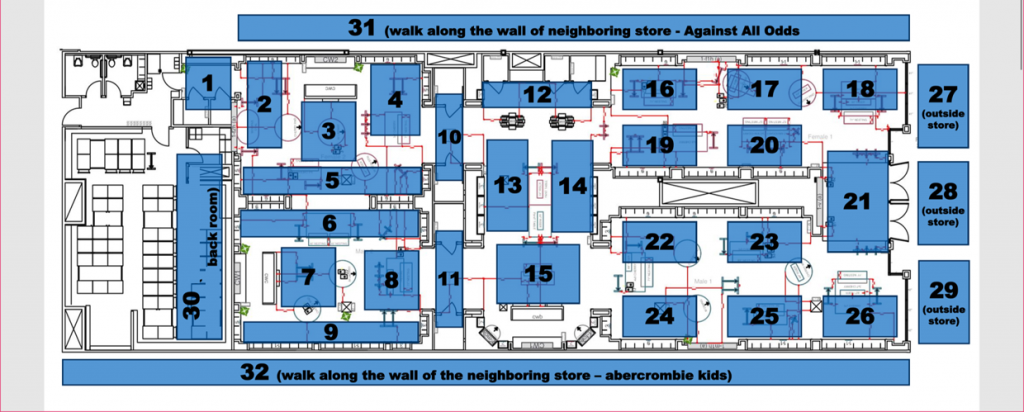

So if a shopper is outside the front of the store, a classification model based on the above calibration plan will probably assign them to section 18, 21 or 26. And an X,Y regressor may well place them somewhere at the far right edge of the store. A shopper next door (above) will likely be placed somewhere near the top of the store, but might land in section 1, 2, 3, 4, 10, 12, 16, 17 or 18.

It’s a mess.

Some vendors rely on the fact that signals will be blocked by walls and just assume that this problem isn’t going to be serious. I’m here to tell you that’s not true. We routinely see electronic systems pick up WAY more signals than door-counters pick up shoppers. Some vendors will tune the sensitivity of the sensors to get a shopper count that’s kind of close to your door count. But that isn’t a good approach at all. First, electronics shouldn’t be tracking at your door count level. Not every shopper has a smartphone with its Wifi radio enabled and classifiable. So the number shouldn’t look close to your door count. Second, every sensor in the store will have a different level of outside noise, and that noise will vary from pretty powerful to barely detectable. There is no one setting on a sensor that will work to threshold out traffic that’s outside the store. So you either drop real shopper data or include shoppers from outside the store.

When we tried these methods, we inevitably saw strong traffic hot spots in the front of the store. Often in places that made no sense from a shopping standpoint. It just didn’t work.

The right approach turned out to be extending the ML fingerprinting. In our early attempts, we did something like this:

Now, we could build a classifier that identified shoppers walking past the front-of-store. In fact, by aggregating all shoppers in areas 27-29, we could build a binary classifier for whether the shopper was inside or outside the store.

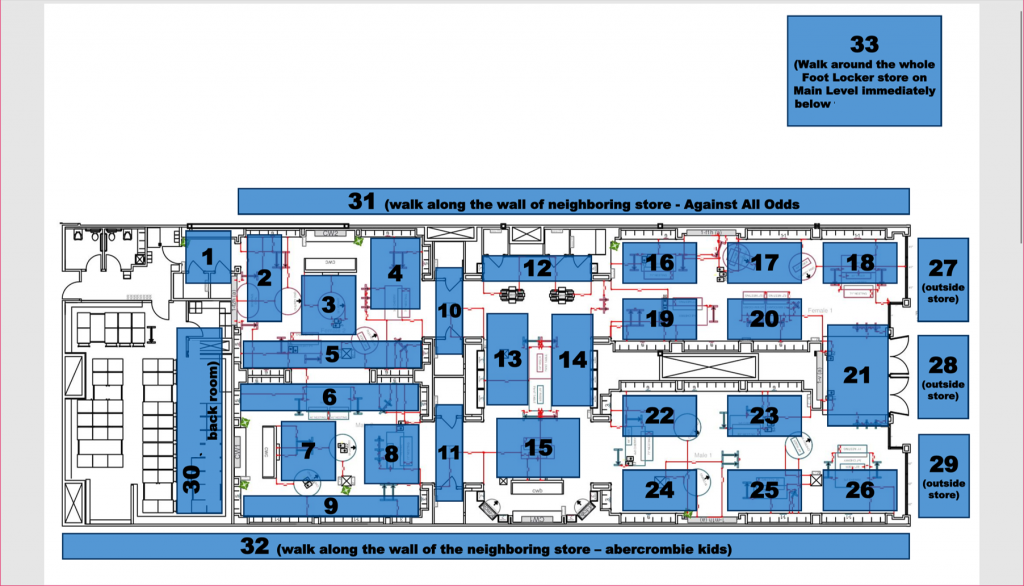
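The aggregation of areas 27-29 into a single outside class amounts to relabeling the calibration data before training. A minimal sketch, assuming each calibration sample is a pair of a signal-strength feature list and the section number it was collected in (that sample layout and the function names are assumptions for illustration):

```python
# Sections 27-29 are the front-of-store pass-by areas described above.
OUTSIDE_SECTIONS = {27, 28, 29}

def to_binary_label(section):
    """Collapse a section number into an inside/outside label."""
    return "outside" if section in OUTSIDE_SECTIONS else "inside"

def relabel(samples):
    """Rewrite (features, section) pairs as (features, inside/outside) pairs."""
    return [(features, to_binary_label(section)) for features, section in samples]

# Hypothetical calibration samples: (per-sensor signal strengths in dBm, section)
calibration = [
    ([-55.0, -70.0, -82.0], 18),
    ([-78.0, -85.0, -90.0], 27),
    ([-80.0, -83.0, -91.0], 29),
]
binary_training_data = relabel(calibration)
print([label for _, label in binary_training_data])
```

The same training samples then feed two models: the original section classifier and the binary in-store classifier.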

This approach worked really well for front-of-store pass-by traffic. But we soon realized that it wasn’t enough. Wifi radio signals DO make it through the walls of the store. And we continued to see odd traffic patterns and hot-spots in areas along the outer edges of mall stores. We even had one store where we saw a dense hotspot and some very odd busy times in the far back of the store. Inspection of the location revealed that there was a pizza place behind the store!

The solution is pretty obvious. We extended the fingerprinting to the entire boundary of the store:

We thought we had the problem licked until the first time we tackled a multi-floor department store. When we set up electronic detection in a multi-floor store, each floor becomes a separate reporting unit – almost like a store unto itself. But our DM1 platform is really powerful. So powerful that it tends to expose bad data in ways that often make our lives difficult. In this case, when we ran path reports we could see that people were constantly hopping back and forth between floors in a way that just didn’t make sense. We’d see common paths that went from Floor 2 to Floor 3 to Floor 2 to Floor 3 to Floor 4 to Floor 2 to Floor 3 – even in a visit that measured less than 5 minutes. We knew these paths weren’t reasonable or right, and when we put the data under a microscope what we found was that ALMOST EVERY PROBE from a smartphone was picked up by multiple sensors on multiple floors.

Ouch.

We were astonished by the degree to which floors and ceilings DID NOT block signal detection. There were common cases where we picked up probes 2 or 3 floors up. Almost 30% of probes were picked up on 3 floors or more. Almost 90% of probes were picked up on at least 2 floors. What’s especially significant about this problem is that it CAN’T be solved by geometric methods of trilateration. To some extent, our challenges with things like front-of-store pass-by traffic were artifacts of the ML approach. Proper geometric trilateration would locate at least some devices outside the store. But trilateration is fundamentally two-dimensional. It won’t detect that a device is above or below the sensors. Nor was it nearly as simple as picking the floor of the sensor with the highest detected power. Our analysis of the data indicated that proper floor identification was almost impossible with simple rule-based logic.
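For reference, the kind of simple rule that falls short looks something like the sketch below. The detection layout (a list of floor and signal-strength pairs for one probe) and the numbers are made up for illustration.

```python
def floors_heard(detections):
    """Set of floors on which at least one sensor picked up the probe."""
    return {floor for floor, _rssi in detections}

def strongest_sensor_floor(detections):
    """Naive rule: assign the probe to the floor of the loudest sensor."""
    floor, _rssi = max(detections, key=lambda d: d[1])
    return floor

# One hypothetical probe, heard on three floors: (floor, signal strength in dBm)
probe = [(2, -71.0), (3, -68.0), (4, -80.0)]
print(sorted(floors_heard(probe)))    # floors that heard the probe
print(strongest_sensor_floor(probe))  # the naive floor guess
```

A shopper standing on Floor 2 under an atrium, far from any Floor 2 sensor, could easily produce readings like these, and the rule would put them on Floor 3.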

For a department store where we own the collection, there was an easy solution for this. We took our ML data and started by building a floor model. The floor model works by combining all the floors into a single store and consolidating sensor readings for a device. Since we know which floor each sensor is on during calibration, our existing training data could be used.

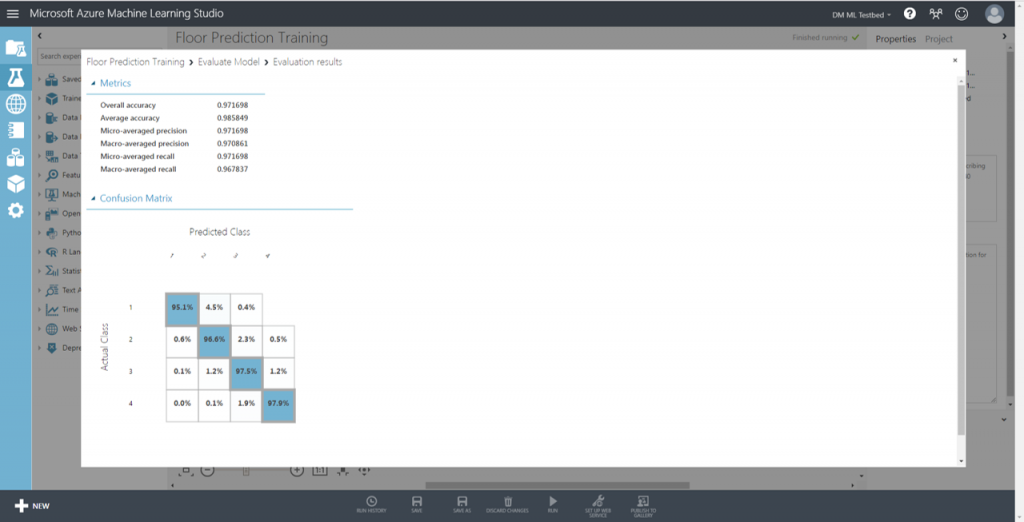

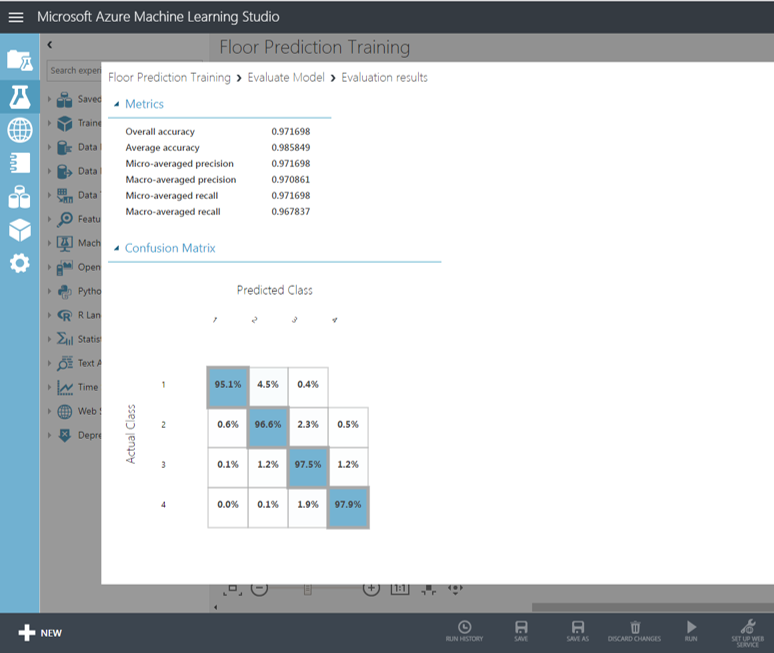
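One way to picture the consolidation step: every sensor in the building, regardless of floor, gets a fixed slot in a single feature vector for each device. The sketch below is an illustration under assumptions, not the actual pipeline. The sensor IDs, the choice to keep the strongest reading per sensor, and the `MISSING_DBM` fill value are all hypothetical.

```python
MISSING_DBM = -100.0  # assumed placeholder for "this sensor heard nothing"

def consolidate(readings, sensor_ids):
    """Build one fixed-order feature vector from a device's raw readings.

    readings   -- iterable of (sensor_id, rssi_dbm) pairs from any floor
    sensor_ids -- every sensor in the whole building, in a fixed order
    """
    strongest = {}
    for sensor_id, rssi in readings:
        # Keep the strongest reading when a sensor hears the device twice.
        if sensor_id not in strongest or rssi > strongest[sensor_id]:
            strongest[sensor_id] = rssi
    return [strongest.get(sensor_id, MISSING_DBM) for sensor_id in sensor_ids]

# Hypothetical building with two sensors on each of two floors.
ALL_SENSORS = ["f1_a", "f1_b", "f2_a", "f2_b"]
device_readings = [("f2_a", -60.0), ("f1_b", -75.0), ("f2_a", -58.0)]
features = consolidate(device_readings, ALL_SENSORS)
print(features)
```

During calibration each such vector is paired with the floor the phone was actually on, which gives the floor model its training labels.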

We commonly use a one-vs-all multiclass classifier for this model, and the results are really, really accurate. I’ve attached an actual real-world confusion matrix from a department store floor model below:

As you can see, it gets the floor right in the holdout set more than 97% of the time and some of our models have done better than 99%. We further enhance this by identifying floor-hopping behavioral patterns in a visit and eliminating them from the data.
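As an illustration of the floor-hopping cleanup, the sketch below removes the simplest pattern: a single observation on another floor sandwiched between two observations on the same floor. This is an assumed, deliberately simple rule for the sake of example; real behavioral filtering would also look at things like dwell time.

```python
def smooth_floor_hops(floors):
    """Replace one-observation excursions (A, B, A) with (A, A, A)."""
    cleaned = list(floors)
    for i in range(1, len(cleaned) - 1):
        before, after = cleaned[i - 1], cleaned[i + 1]
        if before == after and cleaned[i] != before:
            cleaned[i] = before  # treat the lone odd floor as a ghost
    return cleaned

# Hypothetical per-probe floor predictions for one short visit.
visit = [2, 3, 2, 3, 2, 4, 4]
print(smooth_floor_hops(visit))
```

The move from Floor 2 to Floor 4 at the end survives because the device stays on Floor 4, which is what a real floor change looks like.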

But this experience of floor ghosting convinced us that the problem was probably more severe in mall stores than we’d accounted for. So we once again (and for a final time) expanded our ML calibration process:

These days, we not only calibrate the store, the front outside, and the adjacent stores, but also the floor above or below (or both). These last calibration areas are much less detailed and take a lot less time – but they provide a highly accurate way for us to create a full-featured out-of-bounds model BEFORE we assign Section or X,Y coordinate.
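The ordering matters: the out-of-bounds decision acts as a gate in front of the location models. Here is a minimal sketch of that two-stage flow, with trivial stand-in functions in place of trained models (the function names, thresholds, and feature layout are all hypothetical):

```python
def locate(features, in_bounds_model, section_model):
    """Run the out-of-bounds gate first, and only then assign a section.

    Returns None for devices judged to be outside the store, so they never
    reach the section (or X,Y) model at all.
    """
    if not in_bounds_model(features):
        return None
    return section_model(features)

# Stand-in "models" for illustration only; real ones would be trained.
def toy_in_bounds(features):
    return max(features) > -75.0  # assumed: someone inside is loud somewhere

def toy_section(features):
    return features.index(max(features)) + 1  # section of the loudest sensor

print(locate([-60.0, -72.0, -88.0], toy_in_bounds, toy_section))
print(locate([-82.0, -85.0, -90.0], toy_in_bounds, toy_section))
```

Because rejected devices return nothing, they can't show up as phantom hot spots along the store's edges.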

The result is a vastly more accurate interpretation of the electronic geo-location data.

The extent to which boundary and floor issues corrupt electronic geo-location data came as a rude surprise to us. And it’s a problem that electronic vendors aren’t too open about. But it turns out that by extending our calibration techniques it’s a problem that can be solved by machine learning.